Because We’re Living Through an “Infodemic”

By Stephen C. Rea, PhD (Colorado School of Mines), Colin Bernatzky (University of California, Irvine), and Sion Avakian (University of California, Irvine)

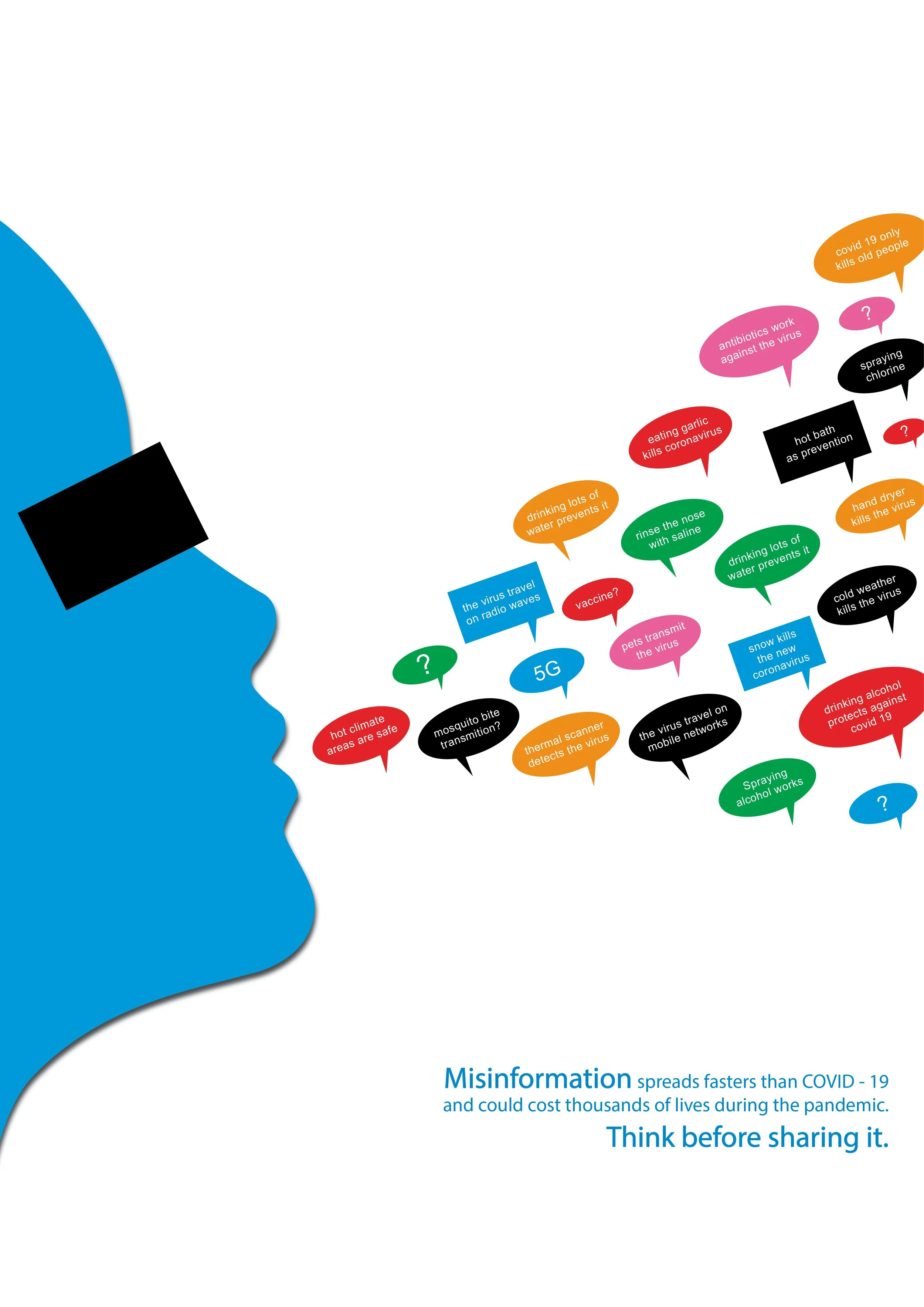

In February 2020, about a month before COVID-19 became an inescapable reality around the world, the World Health Organization issued a warning about another, related danger: an “infodemic.” As conspiracy theories about the origins of COVID-19, the severity of its threat, and possible treatments circulated on social media, WHO officials cautioned that spreading false and misleading claims would make the work of combating the virus and its spread that much more difficult. They urged Silicon Valley’s Big Tech companies—especially social media platforms like Facebook, Twitter, YouTube, and TikTok—to remove or flag content about COVID-19 that wasn’t based on science. Many have done so in the months since. However, once disinformation starts spreading online, it quickly takes on a life of its own.


COVID-19 is just one of many recent crises that disinformation campaigners and other digital extremists have taken advantage of to sow chaos, destabilize the news media ecosystem, and mobilize individuals and groups to their causes. From presidential elections, to civil rights movements, to public health programs, extremists look for opportunities to spread inaccurate or outright manufactured information, manipulate media coverage, and further their own agendas. Increasingly, they rely on digital tools like social media, online forums, and “do-it-yourself” image, video, and audio production to accomplish their goals. Following the violence at Charlottesville, Virginia’s “Unite the Right” rally in 2017, UC Irvine’s Office of Inclusive Excellence launched “Confronting Extremism,” an initiative “dedicated to understanding the ideas and behaviors advocated far outside of alignment to the campus values for social justice and equity in today’s society.” As part of that initiative, we have developed a collection of six self-paced teaching modules titled “Confronting Digital Extremism” that we hope will not only raise awareness of extremists’ “digital toolkits,” but also inspire effective means of confronting extremism online.

About the Modules

Source: United Nations COVID-19 Response

Modules 1 through 4 explore digital extremism through four different “lenses”: “Case Studies in Disinformation” distinguishes disinformation from propaganda and misinformation, and encourages students to explore how what they encounter in their social media feeds may be radically different from the content someone else sees; “Trolls and Extremists” analyzes the similarities and differences between online trolls and digital extremists and their historical development; “Algorithmic Exploitation” digs into some of the specific tools that digital extremists use to exploit social media and online search tools’ affordances, and lets students experiment with social media recommendation algorithms; and finally, “Toward a New Digital Civics” proposes ideas for a curriculum devoted to the rights and responsibilities of online citizenship in the context of disinformation and extremism.

Left: two members of The Base, a far-right extremist paramilitary hate group, wearing face masks to conceal their identities. Right: an example of everyday mask usage during the COVID-19 pandemic

In response to the rapid spread of misinformation, cyberhate, and virtual extremism unleashed by the COVID-19 global pandemic, we subsequently added two new modules that aim to contextualize the perils and pitfalls of the digital landscape in this unique historical moment. Module 5 explores how pandemics and similar crises have historically fueled processes of racialization, othering, and stigmatization, examining how these processes have been both exacerbated and constrained through social media in the age of COVID-19. Module 6 delves into the increasing cross-pollination between white supremacists, science denialists, militia groups, and other factions that have been thrust together on- and offline by the pandemic. In this final module, we outline the underlying appeal of conspiratorial content and review strategies to curb the current infodemic and restore our digital ecosystem.

How We Envision the Modules Being Used

The modules may be used separately or in conjunction with one another. We hope that the lessons, in part or in whole, can be integrated into high school, undergraduate, and graduate curricula across a range of subjects, from communications, to political science, to literature. In addition, these modules can be used to educate teachers, public health professionals, and concerned citizens seeking to inoculate themselves against the infodemic.

Student Perspective on Modules & Learning Activities


The modules can be used to strengthen our sense-making apparatus in a time when we seemingly cannot rely on institutions that have traditionally been trusted authorities. When once-reliable sources mine clicks and technology giants bank on outrage to harvest attention, steering clear of misinformation grows more difficult. The lessons and activities herein are guides for developing an intuition for dis- and misinformation trends, navigating and interacting with digital platforms, and confronting our own biases. Those biases may be useful when choosing the next movie to watch, but they become destructive forces that distort our interpretation of reality when channels are flooded with fear, uncertainty, and doubt. Although neither misinformation nor our cognitive biases are new phenomena, their convergence during a time of high connectivity has concerning and destructive effects. The modules expose the ease with which dis- and misinformation spread, and thus are part of an early warning system alerting us to our highly connected society’s deficiencies in defending against such threats. Amidst the torrent of bots and bad-faith actors, it is important to remember that social media are just that: digital tools that are inherently social. As such, social science has a critical role to play in understanding the allure of misinformation, mitigating the scope of the infodemic, and reassessing what “civics” means for participation in digital ecosystems.


STEPHEN C. REA is a sociocultural anthropologist whose research focuses on digital culture. COLIN BERNATZKY is a PhD candidate in Sociology at UC Irvine whose research explores the social determinants of vaccine hesitancy. SION AVAKIAN is a computer science undergraduate at UC Irvine.